We've got functions to read CSV data into data sets, and clean data sets.

Georgina

This is sort of like a pipeline for data. Or a refinery.

What do you mean?

Georgina

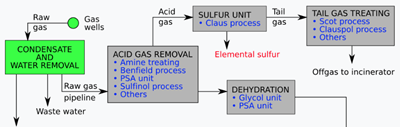

My aunt Tabitha is an engineer, works at a gas refinery. She showed me a diagram of a refinery once, though I didn't understand it all.

Gas refinery (Wikipedia)

You start off with the raw material, do something to it, and get stuff that's closer to the final product. You do something to that, getting something even closer. And so on.

Our code is like that. Got a raw CSV file. read_csv_data_set does something with it, making a list of dictionaries. clean_goat_scores does something to that, getting a step closer. And so on, until the output.

That's a great metaphor, Georgina. In fact, data analysts talk about data pipelines, with step after step making the data closer and closer to what they can analyze.

Some people say a data pipeline is when you link together multiple programs, not sections within one program. However, conceptually it's the same thing.

Hmm. Thinking about pipelines helps make better sense of this part of the course. We have different kinds of functions we can assemble to make what we want. Each function is a data machine that takes data in and sends something out.
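Here's a rough sketch of the idea: two machines, with the output of one feeding the next. It's not the course's actual code. The read_csv_data_set here takes CSV text rather than a file (an assumption, to keep the example self-contained), convert_scores is a simplified stand-in for a cleaning step, and the goat names and scores are made up.

```python
import csv
import io

# Made-up CSV text standing in for a raw CSV file on disk.
RAW_CSV = """Goat,Before,After
Bella,12.5,14.0
Chester,9.0,11.5
"""


def read_csv_data_set(csv_text):
    # Assumed version of the reading machine: CSV text in,
    # list of dictionaries out (every value is still a string).
    return list(csv.DictReader(io.StringIO(csv_text)))


def convert_scores(raw_records):
    # Simplified stand-in for a cleaning machine: list of raw
    # dictionaries in, list of dictionaries with float scores out.
    converted = []
    for raw_record in raw_records:
        converted.append({
            'Goat': raw_record['Goat'],
            'Before': float(raw_record['Before']),
            'After': float(raw_record['After'])
        })
    return converted


# The pipeline: each step's output is the next step's input.
raw_goat_scores = read_csv_data_set(RAW_CSV)
cleaned_goat_scores = convert_scores(raw_goat_scores)
print(cleaned_goat_scores)
```

Each function only needs to know what comes in and what goes out. That's what lets us snap them together.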

Bee tea dubs, I made up the term data machine (at least, I think I did) for this course. Your geeky friends at work won't know the term. It sounds kinda cool, though.

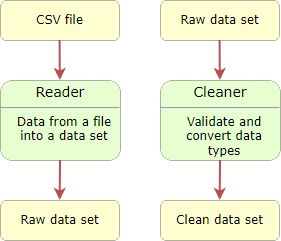

Let's keep track of different kinds of data machines. Here are two we have so far:

Data machines

We'll be able to tackle different tasks by putting them together.

Let's keep track of them in a data machines list.

Each machine will have a main function, but might have supporting functions as well. For example, Ray wrote this cleaning function.

```python
def clean_goat_scores(raw_goat_scores):
    # Create a new list for the clean records.
    cleaned_goat_scores = []
    # Loop over raw records.
    for raw_record in raw_goat_scores:
        # Is the record OK?
        if is_record_ok(raw_record):
            # Yes, make a new record with the right data types.
            clean_record = {
                'Goat': raw_record['Goat'],
                'Before': float(raw_record['Before']),
                'After': float(raw_record['After'])
            }
            # Add the new record to the clean list.
            cleaned_goat_scores.append(clean_record)
    # Send the cleaned list back.
    return cleaned_goat_scores
```

It has support functions, like is_record_ok. is_record_ok doesn't do much by itself, but it's called by clean_goat_scores. It's part of the cleaning machine.
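This page doesn't show is_record_ok, so here's a guess at what a support function like it might look like. The rule it uses (both scores must be present and convertible to numbers) is an assumption for illustration, not necessarily the check Ray wrote.

```python
def is_record_ok(record):
    # Hypothetical check: a record is OK if both scores are
    # present and can be converted to floats.
    for field in ('Before', 'After'):
        try:
            float(record[field])
        except (KeyError, TypeError, ValueError):
            # Missing field, empty value, or not a number.
            return False
    return True


# Made-up records to try it out.
good_record = {'Goat': 'Bella', 'Before': '12.5', 'After': '14.0'}
bad_record = {'Goat': 'Chester', 'Before': 'lots', 'After': '11.5'}
print(is_record_ok(good_record))
print(is_record_ok(bad_record))
```

The cleaning machine's main function can stay short because the "is this OK?" decision lives in its own function.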

More machines

In the rest of the course, we'll make more data machines (DMs), each doing one thing. The "doing one thing" is important. Simple DMs are easier to think about, write, and debug. We can combine simple DMs to do whatever task we want.
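One way to picture combining machines is a small helper that runs a list of them in order. This is a sketch only: run_pipeline, drop_blank_names, and add_change are hypothetical names invented for this example, and the goat data is made up.

```python
def run_pipeline(data, machines):
    # Feed the data through each machine in turn.
    # Each machine's output becomes the next machine's input.
    for machine in machines:
        data = machine(data)
    return data


def drop_blank_names(records):
    # Hypothetical machine: keep only records that have a goat name.
    return [record for record in records if record['Goat'].strip()]


def add_change(records):
    # Hypothetical machine: add a 'Change' field to each record.
    return [
        {**record, 'Change': record['After'] - record['Before']}
        for record in records
    ]


# Made-up data for the demonstration.
scores = [
    {'Goat': 'Bella', 'Before': 12.5, 'After': 14.0},
    {'Goat': '', 'Before': 9.0, 'After': 11.5},
]
result = run_pipeline(scores, [drop_blank_names, add_change])
print(result)
```

Because each machine does one thing, you can test it alone, then reorder or swap machines without rewriting the others.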

Summary

- Metaphors can help you understand things.

- A data pipeline is a buncha data machines that take in data, transform it, and spit it out.

- We'll make functions for different types of machines.